Sunday, July 20, 2025

Sunday, February 9, 2025

Arrested for deep seeking

Wednesday, October 23, 2024

Fatal exception - or how to get rid of errors

Dave, a good friend, sent me a WhatsApp message making fun of a piece of software that, when put under load, did not properly log invalid login attempts.

All he could see in the log file was "error:0" or "error:null".

This reminded me of a similar situation in which we were hunting down the root causes of unhandled exceptions that showed up out of the blue at customer sites several times a day.

The log files didn't really help. We suspected a software component from a third-party provider, and of course the vendor replied that the problem could not be on their side.

We collected more evidence, and after several days of investigation we could prove with hard facts that the cause was indeed the third-party component. We turned off the suspected configuration, and everything worked smoothly after that.

Before we identified the root cause, we were overwhelmed by the sheer number of exceptions in the log files. It was a real challenge to separate the errors that made it to a user's UI from those that stayed silent in the background, even though something had obviously gone wrong there, too. The architects decided that the logs must be free of noise: exceptions were to be caught and dealt with properly. Too many errors make it hard to understand what is really going wrong.

Two weeks later, the log files were free of errors. Soon after, one of the developers came to me and grumbled about a discovery he had just made. He claimed that someone had simply assigned higher log levels to most of the detected errors so that they no longer made it into the log files. In his words, the errors had just been swept under the carpet.
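The post doesn't say which logging framework was involved. As a hypothetical sketch with Python's standard `logging` module (the logger name `billing` and the helper `handle_failure` are invented for illustration), this is how little it takes to make an error vanish: the logger only passes records at or above its configured threshold, so the same failure logged at a level below that threshold never reaches the "log file".

```python
import logging

class ListHandler(logging.Handler):
    """Collects formatted records in memory, standing in for the log file."""
    def __init__(self):
        super().__init__()
        self.lines = []

    def emit(self, record):
        self.lines.append(self.format(record))

logger = logging.getLogger("billing")   # hypothetical component name
logger.setLevel(logging.WARNING)        # production threshold: WARNING and above
logger.propagate = False                # keep the demo output out of the root logger
handler = ListHandler()
handler.setFormatter(logging.Formatter("%(levelname)s %(message)s"))
logger.addHandler(handler)

def handle_failure(exc, swept=False):
    if swept:
        logger.debug("Ignored: %s", exc)     # below the threshold: silently dropped
    else:
        logger.error("Unhandled: %s", exc)   # at/above the threshold: written

handle_failure(ValueError("invalid login"), swept=True)
handle_failure(ValueError("invalid login"))
print(handler.lines)  # ['ERROR Unhandled: invalid login']
```

Both calls describe the same failure; only the level differs, which is why a clean log file on its own proves nothing.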

I cannot judge whether that was really true, or whether it was only done for a few errors where it made sense, but it was funny enough to put a new sketch on paper.

Wednesday, October 2, 2024

The Birthday Paradox - and how the use of AI helped resolving a bug

While working on and testing a new web-based solution meant to replace the current fat client, I was assigned an interesting customer problem report.

The customer claimed that since the new application had been deployed, they had noticed at least two incidents per day in which electronically sent documents contained a corrupt set of attachments. For example, instead of 5 medical reports, only 4 were included in the generated mailing. The local IT administrator observed that the incidents occurred because, from time to time, duplicate keys were assigned to the attachments.

However, they could not tell whether the duplicates were created by the old fat client or by the new web-based application. Both products (old and new) were used in parallel: some departments used the new web-based product, while others stuck with the old one. For compatibility reasons, the new application also inherited antiquated algorithms, so as not to mess up the database and to guarantee that both products could run in parallel.

One of these relics was a four-digit alphanumeric code for each document created in the database. The code only had to be unique within one person's file of documents; if another person had a document with the same code, that was still OK. The code was used (e.g. in court) to refer to a specific document, so it had to be easy to remember.

At first, it seemed very unlikely that a person's document could be assigned a duplicate code, and there was a debate between the customer and various stakeholders on our side.

The new web application was claimed never to create duplicates, but I was not so sure about that. The customer ticket was left untouched, and the customer was left hanging until we found a moment, took the initiative and developed a script that monitored all new documents created during our automated tests and during manual regression testing of other features.

The script ran once an hour. We never saw a duplicate until, after a week, the Jenkins job suddenly raised an alarm reporting one. That moment was like Xmas, and we were thrilled to analyze the two documents.

In fact, both documents belonged to the same person. Now we wanted to know who had created them and which scenario had been applied in this case. Unfortunately, it was impossible to determine who the author of the documents was. My test team said they had not done anything with the person in question. The name of the person for whom the duplicates were created appeared only once in our test case management tool, and not in a scenario that could have explained the phenomenon. The user ID (author of the documents) belonged to the product owner.

He assured us he had not done anything with that person that day and explained that many other stakeholders could have used the same user ID in the test environment where the anomaly was detected.

An appeal in the developer group chat did not help solve the mystery either. The only theory in place was "it must have happened while creating or copying a document". The simplest explanation would have been the latter: the copy procedure.

Our theory was that copying a document could assign the same code to the new instance. But we tested that, and copying worked as expected: the copy received a new unique code that differed from the original's. That would have been too easy anyway.

Determined to solve the mystery, we asked ChatGPT about the likelihood of duplicates in our scenario. The response was juicy.

It claimed there was an almost 2% chance of duplicates if the person already had 200 assigned codes (within his/her documents). That was really surprising, and when we asked ChatGPT further, it turned out that the probability climbed to 25% if the person had 2000 different codes assigned across her documents.

This result is based on the so-called Birthday Paradox, which states that it takes only 23 randomly chosen people to get a better than 50% chance that two of them share a birthday. Wow!
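For readers who want to check such figures themselves, here is a minimal Python sketch of the standard birthday-problem formula: the probability that n codes contain at least one duplicate is 1 minus the product of (N − k)/N for k = 0 … n − 1, where N is the number of possible codes. It assumes every code is drawn uniformly and independently at random, and that the code alphabet has 36 symbols (0-9 and A-Z), which is consistent with the 46,656 = 36³ mentioned later in this post. Under these assumptions the sketch yields roughly 1.2% for 200 codes and roughly 70% for 2000, so treat any single percentage with care. The key point, that duplicates appear much earlier than intuition suggests, holds either way.

```python
def collision_probability(n: int, space: int) -> float:
    """Probability that at least two of n codes drawn uniformly at random
    from `space` possible values are identical (the birthday problem)."""
    if n > space:
        return 1.0  # pigeonhole: more codes than possible values
    p_all_distinct = 1.0
    for k in range(n):
        # the (k+1)-th code must avoid the k codes already handed out
        p_all_distinct *= (space - k) / space
    return 1.0 - p_all_distinct

ALPHANUMERIC = 36                      # assumption: digits 0-9 plus letters A-Z
FOUR_CHAR_SPACE = ALPHANUMERIC ** 4    # 1,679,616 possible four-character codes

print(f"{collision_probability(23, 365):.3f}")   # prints 0.507 (the classic birthday case)
for n in (200, 2000):
    print(n, f"{collision_probability(n, FOUR_CHAR_SPACE):.3f}")
```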

Since I am not a mathematician, I wanted to test the theory with my own experiment. I started writing down the birthdays of 23 random people from my circle of acquaintances. In fact, I could already stop at 18: within this small set, I had found 4 people who shared the same birthday. Fascinating!

That egged us on to develop yet another script and flood one fresh sample person record with thousands of automatically created documents.

The result was revealing:

| Number of assigned codes for 1 person | 500 | 1000 | 1500 | 2000 | 2500 |
|---|---|---|---|---|---|
| Number of identified duplicates (Run 1) | 0 | 0 | 5 | 8 | 11 |
| Number of identified duplicates (Run 2) | 0 | 0 | 3 | 4 | 6 |

With these two test runs, we could prove that the new application produced duplicates as soon as the person already had enough documents assigned.

The fix could be as simple as this:

- When assigning the code, check for existing codes and regenerate it if needed (this could be time-consuming, depending on the number of existing documents).

- When generating the mailing, the system could check all selected attachments and either automatically correct and regenerate the duplicates, or warn the user about them so they can be corrected manually.
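Both options can be sketched in a few lines of Python. This is a hypothetical illustration, not the product's actual fix: the helper names `new_unique_code` and `duplicate_codes` and the 36-symbol alphabet are assumptions, and in the real system the set of existing codes would come from a database lookup for the person, which is where the cost mentioned in the first bullet comes in.

```python
import secrets
import string

ALPHABET = string.digits + string.ascii_uppercase  # assumption: 36 symbols, 0-9 and A-Z
CODE_LENGTH = 4

def new_unique_code(existing: set[str], max_attempts: int = 1000) -> str:
    """Option 1: draw random codes until one is not yet used by this person.

    Raises RuntimeError once all codes are taken (or after too many tries)
    instead of silently handing out a duplicate."""
    if len(existing) >= len(ALPHABET) ** CODE_LENGTH:
        raise RuntimeError("all codes for this person are in use")
    for _ in range(max_attempts):
        code = "".join(secrets.choice(ALPHABET) for _ in range(CODE_LENGTH))
        if code not in existing:
            return code
    raise RuntimeError(f"no free code found after {max_attempts} attempts")

def duplicate_codes(attachment_codes: list[str]) -> set[str]:
    """Option 2: return the codes that occur more than once among a mailing's attachments."""
    seen: set[str] = set()
    dupes: set[str] = set()
    for code in attachment_codes:
        if code in seen:
            dupes.add(code)
        seen.add(code)
    return dupes

codes: set[str] = set()
for _ in range(2500):                      # same volume as the largest test run above
    codes.add(new_unique_code(codes))
print(len(codes))                          # 2500: no duplicates by construction
print(duplicate_codes(["A1B2", "C3D4", "A1B2"]))  # {'A1B2'}
```

The retry loop stays cheap as long as a person uses only a small fraction of the code space; the explicit error makes the "all codes used up" case visible instead of producing a silent duplicate.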

What followed was a nicely documented internal ticket with all our analysis work. The fix was given the highest priority and made it into the next hotfix.

When people ask me what I find so fascinating about software testing, this story is a perfect example. Sure, we often have to deal with boring regression testing or with repeatedly throwing pieces of code back to the developer because something was obviously wrong. But the really exciting moments for me are puzzles like this one: fuzzy ticket descriptions, false claims, obscure statements, contradictory or incomplete information, accusations, and nobody who really finds the time to dig deeper into the "forensics".

That is the moment where I cannot resist and love to jump in.

But the most interesting finding in this story has not been revealed yet. While looking at the duplicates, we noticed that they all ended with the character Q.

And when we looked more closely at the other, non-duplicated codes, we noticed that actually ALL codes ended with the character Q. This was even more exciting. It reduced the number of possible codes from about 1.68 million (36^4 = 1,679,616) to only 46,656 (36^3), and with it drastically increased the probability of duplicates.
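Re-running the birthday-problem calculation for both code spaces shows how much the stuck last character hurts. Again, this is a hypothetical sketch that assumes the remaining three characters are uniformly random over a 36-symbol alphabet; under that assumption, 200 codes give a collision probability of about 1.2% with four free characters but about 35% when the last one is always Q.

```python
def collision_probability(n: int, space: int) -> float:
    """Birthday-problem probability that n uniform random codes contain a duplicate."""
    if n > space:
        return 1.0  # pigeonhole: more codes than possible values
    p_all_distinct = 1.0
    for k in range(n):
        p_all_distinct *= (space - k) / space
    return 1.0 - p_all_distinct

FULL_SPACE = 36 ** 4      # four free characters: 1,679,616 codes
STUCK_Q_SPACE = 36 ** 3   # last character always 'Q': 46,656 codes

for n in (200, 500, 1000):
    print(f"{n:>5} codes: {collision_probability(n, FULL_SPACE):6.1%} "
          f"(4 free characters) vs {collision_probability(n, STUCK_Q_SPACE):6.1%} (ends in Q)")
```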

In the end, I could also prove that the old legacy program was not 100% free of duplicates either, even though creating one was close to impossible. The only way to force the old program to create a duplicate was to flood one sample person with 46,656 records, meaning all codes were used up. But that scenario is so unrealistic that it was almost laughable.

In the end, it turned out that the problem was a configuration setting in a third-party vendor's randomizer API function. To make the randomizer produce random values, you had to explicitly tell it to RANDOMIZE. =;O) The title of this blog post could therefore also have been "Randomize the Randomizer" =;O)
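The vendor's API isn't named here, so this is only a generic illustration of the pitfall using Python's `random` module, not the vendor's actual behavior. A pseudo-random generator that always starts from the same fixed seed hands out the same "random" sequence on every start, much like classic Visual Basic's `Rnd`, which repeats its sequence unless `Randomize` is called first. The helper `generate_codes`, the seed 12345 and the 36-symbol alphabet are assumptions for the sketch.

```python
import random
import string

ALPHABET = string.digits + string.ascii_uppercase  # assumption: 36-symbol code alphabet

def generate_codes(rng: random.Random, count: int, length: int = 4) -> list[str]:
    """Draw `count` codes of `length` characters from the given generator."""
    return ["".join(rng.choice(ALPHABET) for _ in range(length)) for _ in range(count)]

# Not randomized: every "program start" uses the same hard-coded seed,
# so each run hands out exactly the same sequence of codes.
run_1 = generate_codes(random.Random(12345), 5)
run_2 = generate_codes(random.Random(12345), 5)
print(run_1 == run_2)  # True

# Randomized: SystemRandom draws from the operating system's entropy source
# (random.Random() without an argument is also seeded from it), so every
# run gets its own sequence.
run_3 = generate_codes(random.SystemRandom(), 5)
```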

Further notes for testers

Duplicate records can easily be found in Excel: on the Data tab, in the Data Tools group, choose "Remove Duplicates".

Another tool that helped in my case was the online tool at

https://www.zickty.com/duplicatefinder

where you can paste your full data set and it returns all duplicates.

Yet another alternative is an SQL GROUP BY query, as in my case (`table` and `xxx` stand for the real table name and person ID):

```sql
SELECT COUNT(*) as num, mycode FROM table WHERE personid=xxx GROUP BY mycode ORDER BY num DESC;
```
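To try the idea without touching a real database, here is a small Python sketch using an in-memory SQLite database. The table name `documents` and the sample rows are hypothetical. It adds a `HAVING COUNT(*) > 1` clause so that only the duplicated codes are returned, instead of every code sorted by frequency.

```python
import sqlite3

# Hypothetical schema: one row per document, with the owning person and its code.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE documents (personid INTEGER, mycode TEXT)")
conn.executemany(
    "INSERT INTO documents VALUES (?, ?)",
    [(1, "7K2Q"), (1, "A9XQ"), (1, "7K2Q"), (2, "7K2Q")],  # person 2 may reuse a code
)

rows = conn.execute(
    """
    SELECT COUNT(*) AS num, mycode
    FROM documents
    WHERE personid = ?
    GROUP BY mycode
    HAVING COUNT(*) > 1
    ORDER BY num DESC
    """,
    (1,),
).fetchall()
print(rows)  # [(2, '7K2Q')]
```

Person 2's copy of `7K2Q` is not reported, because codes only have to be unique within one person's documents.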

Tuesday, April 16, 2024

AI and a confused elevator

A colleague recently received a letter from the real estate agent stating that several residents had reported a malfunction of the new elevator. The reason, as it turned out after an in-depth analysis: the doors had been blocked by people hauling furniture while moving into the building. This particular malfunction detection was claimed to be part of the new elevator system, which is based on artificial intelligence.

The agent kindly asked the residents NOT to block the doors anymore, as this confuses the elevator and is likely to make it stop working again.

I was thinking..."really"?

I mean... when I hear about AI in elevators, the first thing that crosses my mind is smart traffic management [1]. For example, in our office building, most employees go for lunch at around noon and call the elevators. An easy way to do them a great favor would be for the elevator to return to the floor where people pressed the button right after delivering the previous group. Or, if there are several elevators, make sure they wait at different positions in the building so people never have to wait too long for one.

But I had never expected an elevator to get irritated and thrown off for several days just because someone temporarily blocked the doors. It surprises me that such an elevator has no built-in facility to reset itself automatically after a while. It's weird that a common use case like temporarily blocked doors wasn't even covered in the technical reference manual, and that a technician had to come twice because he/she could not resolve the problem the first time.

A few weeks later, I visited my friend in his new apartment, and I also wanted to see that mysterious elevator. D'oh! Another brand-new elevator that does not let you undo a wrong choice after pressing the wrong button. But it does contain an integrated iPad showing today's latest news.

Pardon me? Who needs that in a 4-floor building?

I often hear or read about software components for which marketing claims they use AI, whereas in reality even the most obvious use cases were not considered, like that undo button [2], which I'll probably miss in elevators till the end of my days.

References

[1] https://www.linkedin.com/pulse/elevators-artificial-intelligence-ascending-towards-safety-guillemi/

[2] https://simply-the-test.blogspot.com/2018/05/no-undo-in-elevator.html

Wednesday, November 29, 2023

Software made on Earth

This is a remake of my original cartoon, which was published in the SD Times (N.Y.) newsletter on April 1, 2008.

Wednesday, September 27, 2023

Revise the Test Report

I thought I had already published this cartoon in 2020, but I couldn't find it, so I am doing it now, with a 3-year delay... =;O)

Saturday, August 5, 2023

Sunday, March 12, 2023

Lindy's Law in Test Automation

by T. J. Zelger, March 12, 2023

When I developed my first "robot" 20 years ago, with the goal of testing the software automatically so that my team didn't have to run the same manual tests every day, there were at most a handful of products that made this possible. There were tools from well-known companies like IBM and Mercury (now HP), and they were extremely expensive. You didn't have much choice. Once you had decided on a tool, it was almost impossible to reverse that decision and switch to another; doing so inevitably resulted in enormous extra costs.

A little later, a few interesting and cheaper alternatives emerged, such as RANOREX, an Austrian company whose quality and attractive value for money soon taught the big players to fear it.

We also experimented with other products, which we used for specific tasks and later replaced with newer or better ones. Among them was a Canadian product that served us well when testing a vehicle valuation calculation engine. As far as I know, the product no longer exists, and my memories are patchy, but I believe it was a forerunner of one of the open source systems widely used today, or something similar.

Twenty years later, you find a flood of even cheaper or free offerings. A closer look reveals that most products are built on a handful of identical core modules. Selenium is currently one of the most popular "engines", and most tools are based on it. I also use Selenium, and because it's really just an "engine", you have to develop additional methods and modules on top of it to turn it into a stable, easy-to-maintain test automation suite.

Nowadays, when building their own framework, most people stick to the page object model. However, we used to take a different approach: our test data and instructions (action words) were kept in Excel. The idea was to enable testers with no programming skills to write automated tests. At the beginning, we even thought we could convince our business analysts to maintain their own tests.

The idea of keeping data and keywords out of the code was not new. It already existed when I was still working with IBM Rational Robot. For example, the SAFS framework by Carl Nagle [1] was the first framework I learned about that followed a similar approach; another is TestFrame, an implementation of Hans Buwalda's so-called "Action Words" [2].
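To illustrate the action-word idea: each spreadsheet row names an action and its arguments, and a small interpreter maps the action names to code that testers never have to see. The sketch below is hypothetical and written in Python. The action words `enter` and `check`, the in-memory `app_state` standing in for the application, and the sample rows are invented; the real framework read its rows from Excel and drove a UI.

```python
from typing import Callable

# Hypothetical stand-in for the application under test.
app_state: dict[str, str] = {}

def enter_text(field: str, value: str) -> None:
    app_state[field] = value

def check_text(field: str, expected: str) -> None:
    actual = app_state.get(field)
    if actual != expected:
        raise AssertionError(f"{field}: expected {expected!r}, got {actual!r}")

# Action word -> implementation. Testers only ever see the left-hand side.
ACTIONS: dict[str, Callable[..., None]] = {
    "enter": enter_text,
    "check": check_text,
}

def run_test(rows: list[list[str]]) -> None:
    """Execute one test case given as spreadsheet-like rows: [action, arg1, arg2, ...]."""
    for row_no, (action, *args) in enumerate(rows, start=1):
        if action not in ACTIONS:
            raise ValueError(f"row {row_no}: unknown action word {action!r}")
        ACTIONS[action](*args)

# What a tester might have typed into the sheet, one row per step.
test_case = [
    ["enter", "LastName", "Muster"],
    ["enter", "FirstName", "Hans"],
    ["check", "LastName", "Muster"],
]
run_test(test_case)
```

The flip side described next is visible even here: someone still has to write and maintain everything behind the `ACTIONS` table.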

Our Excel-based framework was quite a success at our headquarters in Switzerland and Germany, and our plan to have testers without programming skills write their own scripts worked out well. Maintaining the framework, however, wasn't that easy: it took an expert to maintain all the UI locators and the required extensions, which was sometimes a little too tricky for the non-techies. And we never managed to get the business analysts on board.

Later, at a different company, I used the same approach but realized that it didn't have the same effect on testers WITH programming skills. Excel isn't considered cool enough for writing automated tests, and if you try to sell such an approach to techies, they will raise their eyebrows.

So we removed the Excel part and integrated everything into NUNIT, which was also easier to debug.

And now? A newcomer called Cypress is entering the market [3]. As I don't want to get stuck in sweet idleness, we are starting a new adventure to see what it has to offer. We are keeping our Selenium scripts, but they are going into maintenance mode for now.

But do we really have to follow every new trend? Who guarantees that new tools and ideas are really better than what we already have in place?

Fortunately, in my position as QA Manager, I can mostly set the goals myself in this area. If things are going well, you face a dilemma between "don't touch a running system" and making sure you are not missing something.

Today, we use Selenium/C# with NUNIT for automated UI tests triggered daily by Jenkins. In addition, we have an automated test suite that fires requests at the interface level (below the UI), following the Test Automation Pyramid approach [4].

The problem: everything has been working smoothly for years! Why should I spend time investigating alternatives?

I am thinking here of Lindy's Law [5]: if something has proven itself for a long time, there is a high probability that it will continue to do so in the future. In my case, this applies in particular to our automated API tests, which are based on a framework we developed ourselves with the aim of keeping the test scripts at the highest possible level of abstraction. Technical details such as authentication and communication with the backend remain hidden. Also, instead of working with raw JSON input/output, the tests deal with deserialized business objects.
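The in-house framework itself isn't shown in this post. As a hypothetical Python sketch of the idea, the test below works with a typed business object instead of poking into raw JSON, and the parsing sits behind one helper function. The class `Document`, the helper `fetch_document` and the JSON field names `personId`, `code` and `title` are invented for illustration; a real helper would also perform the authenticated backend call.

```python
import json
from dataclasses import dataclass

@dataclass
class Document:
    """Hypothetical business object as returned by the backend API."""
    person_id: int
    code: str
    title: str

def fetch_document(raw_response: str) -> Document:
    """Hide the transport details: turn the JSON payload into a business object.
    (Authentication and the HTTP call itself are left out of this sketch.)"""
    payload = json.loads(raw_response)
    return Document(
        person_id=int(payload["personId"]),
        code=payload["code"],
        title=payload["title"],
    )

# The test script then reads like a business rule, not like JSON plumbing.
doc = fetch_document('{"personId": 42, "code": "7K2Q", "title": "Medical report"}')
assert doc.code.isalnum() and len(doc.code) == 4
```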

We have at least 3000 automated tests, which have identified bugs that the developers' unit tests did not catch. Simply put, these interface tests are a success story, and I don't spend a second thinking about replacing them with a standard product. So why should we scan the market for "better" stuff that maybe isn't better at all?

Because examining alternatives does not necessarily lead to replacing a tried and tested system. It can also be a source of interesting new ideas and extensions for the existing solution. Being open-minded also helps you recognize the limits of your own system, and thus check whether the current version can last not only in the near but also in the distant future, and whether it can be supplemented with one or another useful feature.

The only constraint I am dealing with in this regard is my available time. But that's another story.

References:

[1] SAFS, Carl Nagle

[2] "Action Words" by Hans Buwalda, Software Test Automation (Fewster/Graham), Addison-Wesley

[3] https://www.testautomatisierung.org/testautomatisierung-cypress-vs-selenium/

[4] Test Automation Pyramid, Fowler, https://martinfowler.com/articles/practical-test-pyramid.html

[5] Lindy's Law by Albert Goldman, 1964, https://www.sciencedirect.com/science/article/abs/pii/S0378437117305964 and "Das Magazin", No. 10, March 10-11, 2023

Saturday, March 4, 2023

Saturday, February 4, 2023

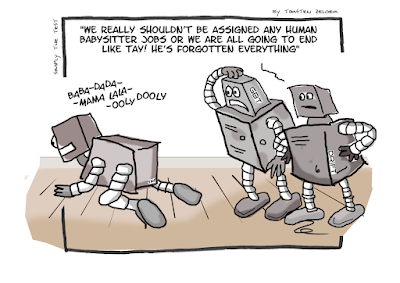

AI Adventures in Babysitting

I recently stumbled upon an article about Microsoft's chatbot Tay, which, after only 24 hours of "training", turned into a more than questionable little "monster". The article was the inspiration for this cartoon.

Saturday, January 14, 2023

Time to illuminate

A crisis is a productive state. You simply need to get rid of its aftertaste of catastrophe.

Max Frisch.

Monday, January 2, 2023

Tuesday, April 5, 2022

The final rollout

Thursday, December 23, 2021

Cheerful debugging messages and its consequences

I don't know what came over me when I used "meow-meow" as the debugging text. Probably Luna is to blame for it. Luna was our 16-year-old cat, who had died shortly before. She is now immortalized in this (less testing-related) cartoon.

We quickly found the cause of the missing signature, fixed the bug and shipped the fix to the customer along with an updated template. Unfortunately, I forgot to remove the debugging text from the template. The customer found the problem during their internal BETA test. We fixed the template, shipped it, no big deal, done.

But about half a year later, the customer reported having seen a letter in the production document management system with the complimentary close "meow-meow" right below the printed signature.

I could feel the blood rushing to my face. How the heck could this happen again? Even though we don't run all regression tests every time we ship a minor release or hotfix, the print-out is part of all smoke tests. So my thought was "this simply can't be true", because we had never seen any such text printed on any of the letters that ended up on our printers.

After a little investigation, it soon became clear what had happened. Even though we had originally sent the correct template, a copy of the old "meow-meow" template was still around. When someone decided to add the template to the git repository, he took the wrong one. As a result, the old signature template was deployed again with the next minor software version.

While this explains how the bug was reintroduced, it does not explain why the testers did not find the issue when testing the print-out.

Further investigation revealed that even though ALL letters processed the "meow-meow" signature template, NOT all of them printed the text below the signature. It depended on several factors, such as the size of the signature and the configuration of the letter being sent. If there was a fixed-size space between signature and complimentary close, the text "meow-meow" simply didn't fit in between and wasn't printed. If, instead, the letter was configured to grow dynamically with the size of the signature, "meow-meow" made it to the printer, too.

Without going into too much detail, only one particular type of letter was affected, and fortunately it was a letter that customers sent only to internal addresses (like an internal email) and not to stakeholders outside the company. Lucky me!

Well, not really...because...

...one of the customers told me that, although this letter is only sent internally, it may still be used as an enclosure for other letters going out to lawyers and doctors. The company feared losing credibility if such letters reached their customers. It was therefore important to remove all identified documents from production before they paid a high price for this slip.

What are the lessons learnt?

First of all, it could have been worse. I mean, if you get a letter that ends with "meow-meow", it's likely to put a smile on your face. In my 20-year career as a tester and developer, I've seen comments and debugging texts that were much worse, and sometimes below the belt. Friends have told me several stories about similar incidents.

A colleague told me they had added a funny test text to their ATM. The text was triggered when a card was about to be disabled. When, in production, their boss wanted to withdraw money from the machine, the card got blocked and the screen showed the text "You are a bad guy. That's why we take your card away". All I know is that he wasn't amused.

Looks like we are not alone.

But, as we have just learned, it depends on who is affected and who will read it. I can only advise you never, ever to type anything like that in any of your tests, because you never know where it's going to show up.

Instead of "meow-meow", a simple DOT or DASH would have done the trick just as well, and would not have raised the same kind of alarm if such a debugging message made it to the bottom of a letter.

This was the last cartoon and blog entry for 2021. There are more to come next year. Please excuse that this cartoon wasn't purely testing-related; the story still is.

I wish you all a merry XMAS and a happy new year. I hope you enjoy the blog and continue to visit me here regularly.

Monday, April 26, 2021

Sunday, March 14, 2021

Monday, January 11, 2021

About Bias and Critical Thinking

I recently stumbled upon a short article about the "Semmelweis-Reflex". It was published in a Swiss magazine, and I found it quite interesting because I could draw some analogies to software testing:

In 1846, the Hungarian gynecologist Ignaz Semmelweis noticed in a maternity unit that the mortality rate of mothers was 4 percent in one department, while in another department of the same hospital it was 10 percent.

While analyzing the reason, he found that the department with the higher death rate was mainly staffed by doctors who also performed post-mortem examinations. Right after an autopsy, they went on to help mothers without properly disinfecting their hands.

The other department, with the lower death rate, was staffed mainly by midwives who were not involved in any autopsies. Based on this observation, Semmelweis advised all employees to disinfect their hands with chlorinated lime.

The consequence: Death rate decreased remarkably.

Despite clear evidence, the staff remained sceptical; some were even hostile. Traditional beliefs were stronger than empiricism.

Even though this happened more than 150 years ago, people haven't changed that much since. We are still heavily biased. The tendency to reject new arguments that don't match our current beliefs is still common today; it is known as the Semmelweis-Reflex. We all have our own experiences and convictions. That is all fine, but it is important to understand that these are personal convictions and cannot simply be turned into a general truth.

Similarly, John Kenneth Galbraith (Canadian-American economist, 1908-2006) wrote: "In the choice between changing one's mind and proving that there is no need to do so, almost everybody gets busy on the proof". This quote highlights a common human tendency to defend existing beliefs rather than adapt to new information or perspectives.

How can we fight such bias? Be curious! If you spontaneously react to something new with antipathy, force yourself to find parts of the presenter's arguments that could still be interesting, despite your overall disbelief.

Second, make it a habit to question yourself by saying "I might be wrong". This attitude helps overcome prejudice by allowing new information to be considered and processed.

Back to testing:

What I take away from this article is that we should listen, and not hide behind dismissive arguments simply because what we are told doesn't match our current view of the world.

But this story has two sides. If I can be biased, others may be biased, too. Not all information reaching my desk can be considered correct by default. The presenter's view of the world may be equally limited.

Plus, the presenter may have an agenda; his/her intention may be to sell us something. The presenter's view may be wrong and based on "sloppy" analysis, if any facts were collected at all.

Call me a doubting Thomas, but I don't believe anything, until I've seen the facts.

So what?

- If someone tells you "a user will never do that", question it! It may be wrong.

- If someone tells you "users typically do it this way", question it! It may be an assumption and not a fact.

- If someone tells you "this can't happen", question it! Maybe she just hasn't experienced it yet, and it will happen soon.

- If someone tells you "we didn't change anything", question it! A one-line code change is often considered hardly a change at all, but in fact such an adaptation can be the root of a severe new bug or even a disaster.

I trust only facts, or, as someone said: "In God we trust, the rest we test." This is the result of many years of testing software. Come on, I don't even trust my own code. I must be some kind of maniac!

Sources: text translated into English and summarized from the original article "Warum wir die Wahrheit nicht hören wollen" ("Why we don't want to hear the truth") by Krogerus & Tschäppeler, Magazin, Switzerland, March 20, 2021